Introduction

In computer vision and graphics, a long-standing challenge has been reconstructing realistic 3D models from simple 2D images. Traditional 3D reconstruction techniques such as photogrammetry typically require multiple photographs taken from different angles, specialized hardware like depth cameras or LiDAR, and substantial manual labor.

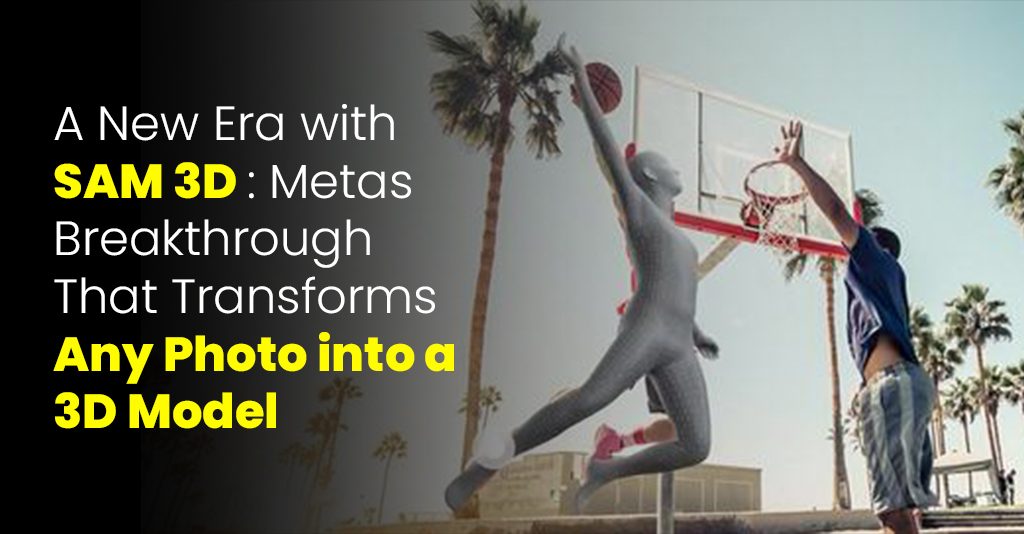

That's why Meta's recent release of SAM 3D, developed by its research group, marks a major milestone. SAM 3D lets users generate fully textured, pose-aware 3D reconstructions from a single ordinary image.

In simpler terms: upload a single photograph (say, one you shot on your phone), and SAM 3D can reconstruct the objects, or even the people, in that photo as 3D models. This capability dramatically lowers the barrier to 3D content creation, enabling creatives, developers, researchers, and ordinary users to "3D-fy" the world around them.

What Is SAM 3D? The Two Models behind the Magic!

SAM 3D is Meta AI's newest 3D reconstruction system and part of the broader Segment Anything family. It is designed to convert a single 2D image into a full 3D model, whether of an object, a scene, or a human body. Unlike older 3D pipelines such as photogrammetry, multi-view stereo, and 3D scanning, which require multiple photos or specialized hardware, SAM 3D works from just one ordinary photo, making 3D reconstruction far more accessible and flexible.

SAM 3D is not a single monolithic model but a pair of specialized sub-models, each tailored to a different reconstruction task:

- 1. SAM 3D Objects: Designed to reconstruct general objects and scenes (e.g., furniture, room interiors, gadgets, and everyday items) as 3D models. It predicts full 3D geometry, texture, and layout from a single image.

- 2. SAM 3D Body: Designed to reconstruct human bodies. Given a single image of a person, it estimates body pose and shape and generates a full 3D human mesh.
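
The "layout" that SAM 3D Objects predicts is easiest to picture as a per-object pose: how large the object is, how it is rotated, and where it sits in the scene. The sketch below assumes, purely for illustration, that layout is a uniform scale, a rotation about the vertical axis, and a translation; the helper `place_in_scene` is hypothetical and is not part of Meta's released code or output format.

```python
import math


def place_in_scene(vertices, scale, yaw_radians, translation):
    """Map object-space vertices (x, y, z) into scene coordinates.

    Hypothetical helper: computes R_y(yaw) @ (scale * v) + t, i.e. a uniform
    scale, then a rotation about the vertical y axis, then a translation.
    This is an illustrative stand-in, not SAM 3D's actual layout format.
    """
    c, s = math.cos(yaw_radians), math.sin(yaw_radians)
    tx, ty, tz = translation
    placed = []
    for x, y, z in vertices:
        # 1. Uniform scale in object space.
        x, y, z = scale * x, scale * y, scale * z
        # 2. Rotate about the y axis (right-handed coordinates).
        x_rot = c * x + s * z
        z_rot = -s * x + c * z
        # 3. Translate to the object's position in the scene.
        placed.append((x_rot + tx, y + ty, z_rot + tz))
    return placed


# Hypothetical example: a 1 x 1 tabletop outline in object space, shrunk to
# half size, turned 90 degrees, and moved to (0, 0.75, 2) in scene coordinates.
tabletop = [(0, 0, 0), (1, 0, 0), (1, 0, 1), (0, 0, 1)]
for corner in place_in_scene(tabletop, 0.5, math.pi / 2, (0.0, 0.75, 2.0)):
    print(tuple(round(v, 3) for v in corner))
```

Keeping geometry in an object-centric frame and applying the pose separately means the same mesh can be repositioned in a scene without being regenerated.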

Together, the two models provide a comprehensive 3D understanding: you can reconstruct entire scenes that include both objects and people, which is useful for applications ranging from interior design to gaming, augmented reality (AR), virtual reality (VR), and more.

Why Is SAM 3D a Breakthrough?

- a. Single-Image Reconstruction

What makes SAM 3D exceptional is its ability to go from one flat image to a fully usable 3D model. Whereas prior 3D reconstruction approaches often required many images captured from multiple angles, or specialized sensors, SAM 3D works from a single 2D photograph. That's a radical simplification that removes hardware and logistical barriers to 3D creation.

- b. Robust to Real-World Complexity

Real-world images often include occlusions (objects partly hidden), clutter (multiple overlapping items), complex lighting, and messy backgrounds. SAM 3D is designed to handle these conditions: both SAM 3D Objects and SAM 3D Body can infer hidden geometry and reconstruct occluded or partially visible parts.

- c. High-Fidelity Geometry + Texture + Layout

SAM 3D doesn't just output a rough 2.5D shape or depth map. It produces full 3D meshes with texture and scene layout, plus pose-aware output for humans. This means the resulting 3D model can be re-rendered from any viewpoint, animated (in the case of bodies), or used as a realistic asset in 3D workflows (games, AR, VR, architecture, etc.).

- d. Open-Source & Developer-Friendly

Meta has released the code, model weights, and inference pipelines for SAM 3D, making it accessible for researchers, developers, and creatives.

Moreover, SAM 3D builds on SAM 3, Meta's new-generation segmentation model, which supports rich prompts (including natural-language descriptions). SAM 3D leverages SAM 3's mask-generation and segmentation strengths to isolate target objects or people before reconstructing them in 3D.

Because SAM 3 supports open-vocabulary prompts (describing objects by name or description rather than picking from fixed categories), SAM 3D inherits this flexibility: you don't need predefined labels or object classes.
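
The article does not spell out how the mask is consumed, so here is only a generic illustration of what "isolating" a target with a binary mask can mean: blank out every pixel outside the mask and crop to the mask's bounding box. The helpers `mask_bounding_box` and `isolate_target` are hypothetical, and the released pipeline may instead pass the full image and mask to the model unchanged.

```python
def mask_bounding_box(mask):
    """Return (top, left, bottom, right) of the True pixels; bottom/right are exclusive."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("mask is empty: nothing to isolate")
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def isolate_target(image, mask, background=(0, 0, 0)):
    """Keep only masked pixels, then crop to the mask's bounding box.

    `image` is a list of rows of RGB tuples and `mask` a same-sized list of
    rows of booleans (e.g. a binary mask produced by a segmentation model).
    """
    top, left, bottom, right = mask_bounding_box(mask)
    return [
        [image[r][c] if mask[r][c] else background for c in range(left, right)]
        for r in range(top, bottom)
    ]


# Tiny 3 x 4 "photo" whose pixel values encode their (row, col) position.
photo = [[(r, c, 0) for c in range(4)] for r in range(3)]
mask = [
    [False, False, False, False],
    [False, True, True, False],
    [False, False, True, False],
]
print(isolate_target(photo, mask))  # [[(1, 1, 0), (1, 2, 0)], [(0, 0, 0), (2, 2, 0)]]
```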

The Technology and Training behind SAM 3D

Behind the scenes, SAM 3D's creators tackled one of the biggest obstacles in 3D research: the "data barrier". Unlike synthetic environments, where full 3D models and multi-view images are easy to generate, real-world 3D data (with accurate geometry, texture, and lighting) is rare and expensive to produce at scale.

To solve this, their pipeline uses a human-in-the-loop data engine: human annotators provide shape, texture, and pose annotations on real-world images, which guide training. Combined with a multi-stage training process that pairs synthetic pretraining with real-world fine-tuning, this allows SAM 3D to generalize well to natural images: scenes in the wild with occlusion, clutter, and unpredictable lighting.
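
The data engine is described only at a high level, so the following is a generic model-in-the-loop sketch, not Meta's actual pipeline. Every name here (`run_data_engine`, `propose`, `choose`, and the two stand-ins) is hypothetical: the current model proposes candidate reconstructions, a human picks the best one or rejects them all, and accepted pairs become training data.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple


def run_data_engine(
    images: Sequence[Any],
    propose: Callable[[Any], List[Any]],
    choose: Callable[[Any, List[Any]], Optional[int]],
) -> List[Tuple[Any, Any]]:
    """One pass of a generic model-in-the-loop annotation round.

    For each image, the current model (`propose`) suggests candidate
    reconstructions, and a human annotator (`choose`) returns the index of the
    best candidate, or None to reject them all. Accepted (image, reconstruction)
    pairs become training data for the next fine-tuning round.
    """
    accepted = []
    for image in images:
        candidates = propose(image)
        pick = choose(image, candidates)
        if pick is not None:
            accepted.append((image, candidates[pick]))
    return accepted


# Stand-ins: a "model" that returns labelled guesses and an "annotator" who
# accepts the second guess for the chair and rejects every lamp candidate.
def fake_model(image):
    return [f"{image}:candidate{k}" for k in range(3)]


def fake_annotator(image, candidates):
    return 1 if image == "chair.jpg" else None


print(run_data_engine(["chair.jpg", "lamp.jpg"], fake_model, fake_annotator))
# [('chair.jpg', 'chair.jpg:candidate1')]
```

In a loop like this, retraining on the accepted pairs would improve the next round's proposals; the article does not say how Meta schedules or sizes its rounds.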

Thanks to this data engine and training recipe, SAM 3D doesn't simply guess geometry; it leverages priors learned from a large, diverse dataset, making reconstructions both plausible and detailed. In human preference tests against prior single-image 3D methods, SAM 3D reportedly wins by a margin of roughly 5:1, a strong signal that people judge its outputs as better and more realistic.
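
A 5:1 margin is easier to interpret as a win rate: for every six decided comparisons, SAM 3D's output would be preferred about five times, or roughly 83%. The arithmetic, assuming the ratio counts only decided (non-tied) comparisons, which the article does not specify; `win_rate` is an illustrative helper:

```python
from fractions import Fraction


def win_rate(wins: int, losses: int) -> Fraction:
    """Fraction of decided pairwise comparisons won, given a wins:losses ratio."""
    if wins < 0 or losses < 0 or wins + losses == 0:
        raise ValueError("need non-negative counts and at least one decided comparison")
    return Fraction(wins, wins + losses)


rate = win_rate(5, 1)
print(rate, f"{float(rate):.1%}")  # 5/6 83.3%
```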

Potential Applications: What Can You Do With SAM 3D?

The release of SAM 3D unlocks a wide range of real-world applications. Here are some promising use-cases:

- Augmented Reality (AR) / Virtual Reality (VR): With 3D models generated from your photos, you can build AR/VR environments faster. For instance, you could photograph the furniture in your room with a phone and visualize a redesign, or import objects into a VR scene.

- Gaming and Digital Content Creation: Artists and game-engine developers can convert real objects or people into usable 3D assets rapidly. Imagine creating game characters or in-game props directly from photos.

- E-commerce & Interior Design: Sellers could let customers place furniture, decor, or products in their own living space in 3D before purchase. For example, a "View in Room" feature could show a lamp, table, or chair from a catalog inside a buyer's photo of their room. Indeed, Meta plans to use SAM 3D for such scenarios.

- Robotics and Spatial Understanding: Robots that navigate or manipulate the real world need 3D understanding. SAM 3D could offer robots and autonomous systems the ability to infer 3D structure (objects, obstacles) from a single camera image, useful for grasping, navigation, and scene understanding.

- Human Digitization, Animation & Healthcare: With SAM 3D Body, you can generate accurate 3D human meshes from photos, potentially useful for animation, digital avatars, ergonomic design, sports analysis, physiotherapy, or virtual fitting rooms.

- Education and Research: Researchers studying human motion, robotics, digital twins, and scene reconstruction can leverage SAM 3D's open-source models to build new experiments without expensive multi-camera rigs or LiDAR.
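
The robotics use case comes with one geometric caveat worth making concrete. Under the standard pinhole camera model, a point and another point twice as far away along the same viewing ray land on exactly the same pixel, so a single image fixes direction but not absolute distance or size; those must come from learned priors, known object dimensions, or extra sensors. The sketch uses made-up intrinsics (`fx`, `fy`, `cx`, `cy`) and is plain camera geometry, not SAM 3D code.

```python
def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x, y, z), z > 0, to pixel (u, v)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (fx * x / z + cx, fy * y / z + cy)


def back_project(u, v, depth, fx, fy, cx, cy):
    """Invert `project` when the depth z of pixel (u, v) is known."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


# Made-up intrinsics for a 640 x 480 camera.
K = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0)

near = (0.2, 0.1, 2.0)            # a point 2 m from the camera
far = tuple(2 * c for c in near)  # same viewing ray, twice as far
print(project(near, **K), project(far, **K))  # the same pixel for both

# Only once a depth is supplied does the pixel map back to a single 3D point.
print(back_project(*project(near, **K), depth=2.0, **K))
```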

In short: SAM 3D fundamentally reduces the cost, time, and skills needed to generate 3D content, opening up 3D modeling to a much broader audience.

Challenges & Limitations (for Now)

While SAM 3D is a major advance, it's worth remembering that automatic 3D reconstruction from a single image still faces inherent limitations. Some of these, acknowledged implicitly in the research, include:

- Ambiguity from a Single Viewpoint: A single image necessarily misses occluded or hidden parts of objects. SAM 3D addresses this using learned priors and contextual inference, but some predictions may still be approximate rather than exact.

- Complexity of Scenes: Extremely cluttered scenes, highly reflective materials, or unusual lighting might degrade reconstruction quality compared to simpler, well-lit photos.

- Need for Correct Segmentation / Masking: The quality of the final 3D model heavily depends on how accurately the target object or person is segmented in 2D. While SAM 3 (the underlying segmentation model) is powerful, imperfect masks can lead to poor 3D outputs.

- Generalization across Domain Gaps: Although trained on a large and diverse dataset, there might be objects or styles (e.g. fabrics, transparent objects, complex textures) for which reconstruction is still hard.

- Ethical & Privacy Considerations: Generating 3D human meshes from photos raises privacy and consent issues, especially when the people in the images have not agreed to it.
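
Mask accuracy is commonly measured as intersection-over-union (IoU) against a reference mask, so a quick IoU check is one way to catch a poor mask before it becomes a poor 3D model. A minimal standard-library sketch; the name `mask_iou` is illustrative, and SAM 3 may score its own masks differently:

```python
def mask_iou(pred, ref):
    """Intersection-over-union of two same-sized binary masks (lists of rows of 0/1 or bools)."""
    if len(pred) != len(ref) or any(len(a) != len(b) for a, b in zip(pred, ref)):
        raise ValueError("masks must have the same shape")
    intersection = union = 0
    for row_p, row_r in zip(pred, ref):
        for p, r in zip(row_p, row_r):
            intersection += bool(p and r)
            union += bool(p or r)
    # Convention: two empty masks agree perfectly.
    return intersection / union if union else 1.0


reference = [[0, 1, 1], [0, 1, 1]]
predicted = [[0, 1, 1], [0, 1, 0]]  # misses one pixel of the object
print(mask_iou(predicted, reference))  # 0.75
```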

Nevertheless, these limitations are common to 3D reconstruction approaches in general; what matters is how well a new model balances the trade-offs, and SAM 3D appears to do so very effectively.

What SAM 3D Means for the Future of 3D, and Why It Matters

SAM 3D represents a paradigm shift in how humans and machines understand and reproduce the physical world. For decades, 3D modeling has been a labor-intensive, expert-driven task requiring multiple cameras, specialized setups, and manual clean-up. With SAM 3D, that barrier is greatly lowered.

The democratization of 3D means that anyone, whether a hobbyist, a small studio, an independent artist, or a startup, can now capture, recreate, and reimagine real-world objects or people in 3D with almost no specialized equipment.

For industries:

- E-commerce can offer realistic place-in-space previews for customers.

- Game development & VR/AR can accelerate asset creation.

- Robotics can leverage better spatial awareness from simple cameras.

- Content creation (films, social media) can integrate 3D assets seamlessly from everyday photos.

- Education & research can experiment with digital twins, human motion analysis, or environment reconstruction without huge budgets.

As the technology matures, we might soon see a world where, instead of maintaining large 3D asset libraries, creators generate 3D models on demand from everyday photos. That's a huge shift in how we think about digital content and how we build immersive, interactive experiences.

Getting Started: How Can You Try SAM 3D?

One of the best things about SAM 3D is its accessibility. Meta has released model checkpoints and inference code, so you don't need to wait for a closed beta or proprietary software; you (or any developer) can start experimenting today.

For quick experiments:

- Use the model via the provided open-source pipeline – mask an object/person in a 2D image, and run inference to get a 3D mesh.

- Try the web demo or playground that Meta offers (for non-technical users) – upload a regular photo and see what SAM 3D generates.

- Once you have a mesh, you can export it (e.g., as .PLY or another standard 3D format) and import it into 3D software, game engines, or AR/VR environments, or use it for further editing and animation.
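
If a reconstruction is available in memory as vertex and face lists, writing a basic ASCII PLY file needs only the standard library. This sketch assumes a plain triangle mesh without textures or vertex colors, and `mesh_to_ply`/`save_ply` are hypothetical helpers; SAM 3D's own tooling may export files directly, possibly in a different representation.

```python
def mesh_to_ply(vertices, faces):
    """Serialize a mesh (vertex xyz tuples, faces as vertex-index tuples) as ASCII PLY."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(vertices)}",
        "property float x",
        "property float y",
        "property float z",
        f"element face {len(faces)}",
        "property list uchar int vertex_indices",
        "end_header",
    ]
    body = [f"{x} {y} {z}" for x, y, z in vertices]
    for face in faces:
        if not all(0 <= i < len(vertices) for i in face):
            raise ValueError(f"face {face} references a missing vertex")
        # Each face line starts with its vertex count, e.g. "3 0 1 2".
        body.append(" ".join(str(n) for n in (len(face), *face)))
    return "\n".join(header + body) + "\n"


def save_ply(path, vertices, faces):
    """Write the mesh to `path` so that tools which read PLY can import it."""
    with open(path, "w", encoding="ascii") as f:
        f.write(mesh_to_ply(vertices, faces))


# A single triangle as a stand-in for a reconstructed mesh.
triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
print(mesh_to_ply(triangle, [(0, 1, 2)]))
```

Calling `save_ply("model.ply", vertices, faces)` would write the same text to disk; ASCII PLY is verbose but easy to inspect, and a binary variant is the usual choice for large meshes.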

For developers and researchers: SAM 3D's open-source release encourages experimentation. You could fine-tune it, build custom pipelines, or integrate it with other tools (e.g., for animation, digital twin generation, or robotics); the possibilities are broad and expanding.

Final Thoughts

SAM 3D by Meta is more than a new AI model; it's a doorway to the 3D world for anyone with a camera and a photo. By dramatically simplifying the process of going from 2D to high-fidelity 3D, it lowers the barrier to content creation, democratizes 3D modeling, and expands what's possible in AR, VR, gaming, design, robotics, and creative workflows.

Yes, there are limitations. Single-image reconstruction will never quite replace careful multi-view photogrammetry when ultra-high fidelity or precise measurements are required. But for many practical use cases, such as asset creation, prototyping, visualization, and creative work, SAM 3D is already more than good enough.

As the technology evolves and more people experiment with it, we can expect a wave of innovation: new workflows, new use cases, and a rethinking of how 3D content is produced and consumed. In that sense, SAM 3D doesn't just reconstruct images; it helps reconstruct our digital future.